Background

Modern web apps (built with Nuxt.js, Next.js, SvelteKit, SolidStart, etc.; primarily full-stack Node.js applications that talk to a database) often need object storage integration so that users can download files directly from the website.

Direct-link downloading is a crucial scenario: it heavily influences the user experience, and it also incurs storage costs that the website must pay to the object storage provider.

The approach presented below should matter to the many developers who build their own websites and manage their own storage. This mature solution is a game-changer for resource download sites (and for anyone planning to build one).

This article is extremely valuable: it is the culmination of best practices for applying modern object storage on the web. If you fully understand it, you can build any resource download website you can imagine and ship it quickly, while keeping it both modern and secure.

Publishing this article takes a great deal of courage, because once it is out, all of this becomes public knowledge. Some websites may lose traffic or even their competitive edge, and object storage may become far more widespread (because you genuinely can offset storage costs with ad revenue quite easily).

If you have any questions about this article, you can reply directly or join our moe Telegram development group: https://t.me/KUNForum

Abstract

#Cloud Drives, #IPFS, #OneDrive, #Self-hosted Home Clouds, #Presigned URLs, #CDN, #Cloudflare, #Backblaze, #S3, #Object Storage

Many object storage services are quite expensive, and some scenarios involve heavy bandwidth usage, which calls for a storage solution that doesn't charge for bandwidth.

After continuous discussions within our development team (https://t.me/KUNForum), we ultimately chose Backblaze (hereinafter referred to as B2) as our provider for S3 Compatible Object Storage.

We are aware of other storage methods, such as cloud drives, IPFS, OneDrive, and self-hosted home clouds, but they come with the following issues:

- Cloud drives: files are easily flagged for policy violations or rate-limited. Users may also have to install subpar clients just to download files, which severely hurts the user experience.

- IPFS: it is still in development, and we don't want to be the guinea pig, so we won't discuss it.

- OneDrive: the standard cost is relatively low, but a large download volume (for instance, a site with 5k daily active users, which is small for a website; this is a rough estimate) will inevitably trigger 429 "Too Many Requests" errors. You can load-balance across multiple accounts, but that is inconvenient.

- Self-hosted home clouds: they are highly susceptible to being blocked by ISPs, and their upload/download bandwidth is far too limited to handle high traffic.

The following article details how to achieve bandwidth-free operations at the deployment level using Cloudflare Workers:

Using Cloudflare Workers to Download Files from a B2 Private Bucket

However, that article did not cover how to implement the upload and download processes at the code level.

Today, we will discuss how to build a modern, high-performance, highly available, cost-effective resource upload and download system with an excellent user experience, based on S3 object storage.

The system design described below is applicable to modern storage-intensive websites, including all resource download websites on the market. If you are planning to build a similar storage-based website, the following content may be very useful to you.

Preflights

Before we begin, I need to mention a previous article I wrote:

Tutorial on Configuring Bucket Information Using the Backblaze Command-Line Tool

This is also a very valuable article. It explains how to use the b2 CLI to manage various properties of a private bucket.

We recommend that your bucket always be Private, as configured in the article above; never make it Public. Additionally, for B2, the "hide file" feature is completely redundant for our purposes. (According to the documentation, only B2's Native API can delete a file immediately instead of hiding it, but once you adopt the B2 Native API, migrating to another storage provider later becomes an excruciating process.)

Transaction Billing

While B2 is inexpensive (at our current scale, we pay about $6/month), the low cost comes at the price of some API operations not being fully fleshed out, as mentioned in the article above.

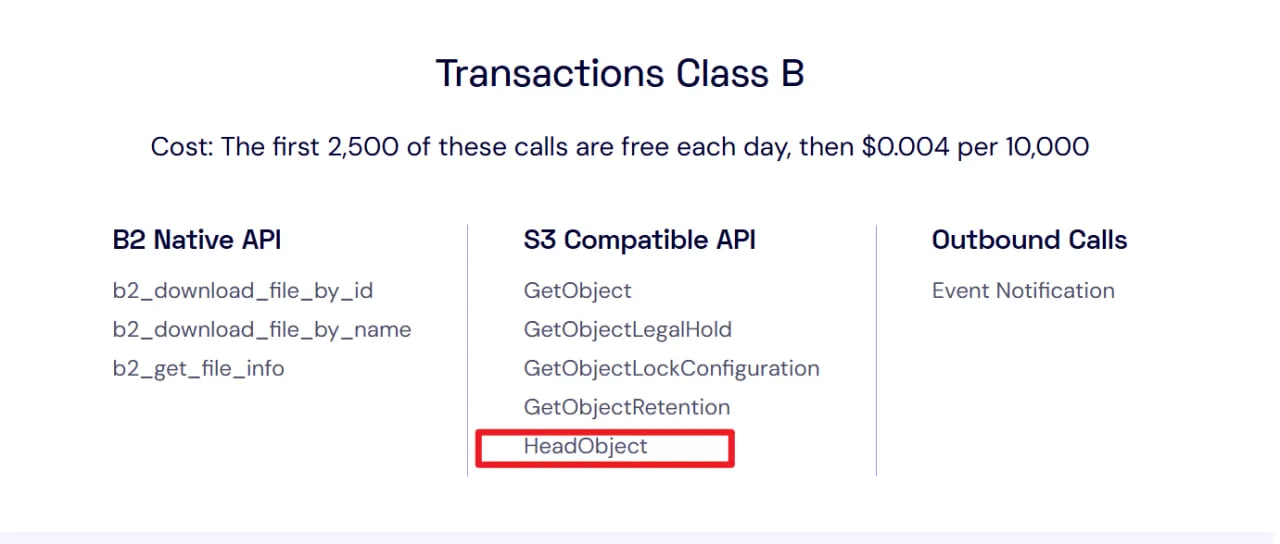

Of the S3 commands used in the upload code below, only HeadObjectCommand is a Class B transaction in Backblaze; the rest are Class A (free) operations.

This transaction billing model applies only to Backblaze. The billing rules for other S3 Compatible Object Storage providers may differ.

It's worth noting that GetObjectCommand (used for downloads) is also a Class B transaction, so although it is cheap, it is still billed.

So if someone hammers your download endpoint with every thread they have, they can still run up a bill big enough to cost you your house.

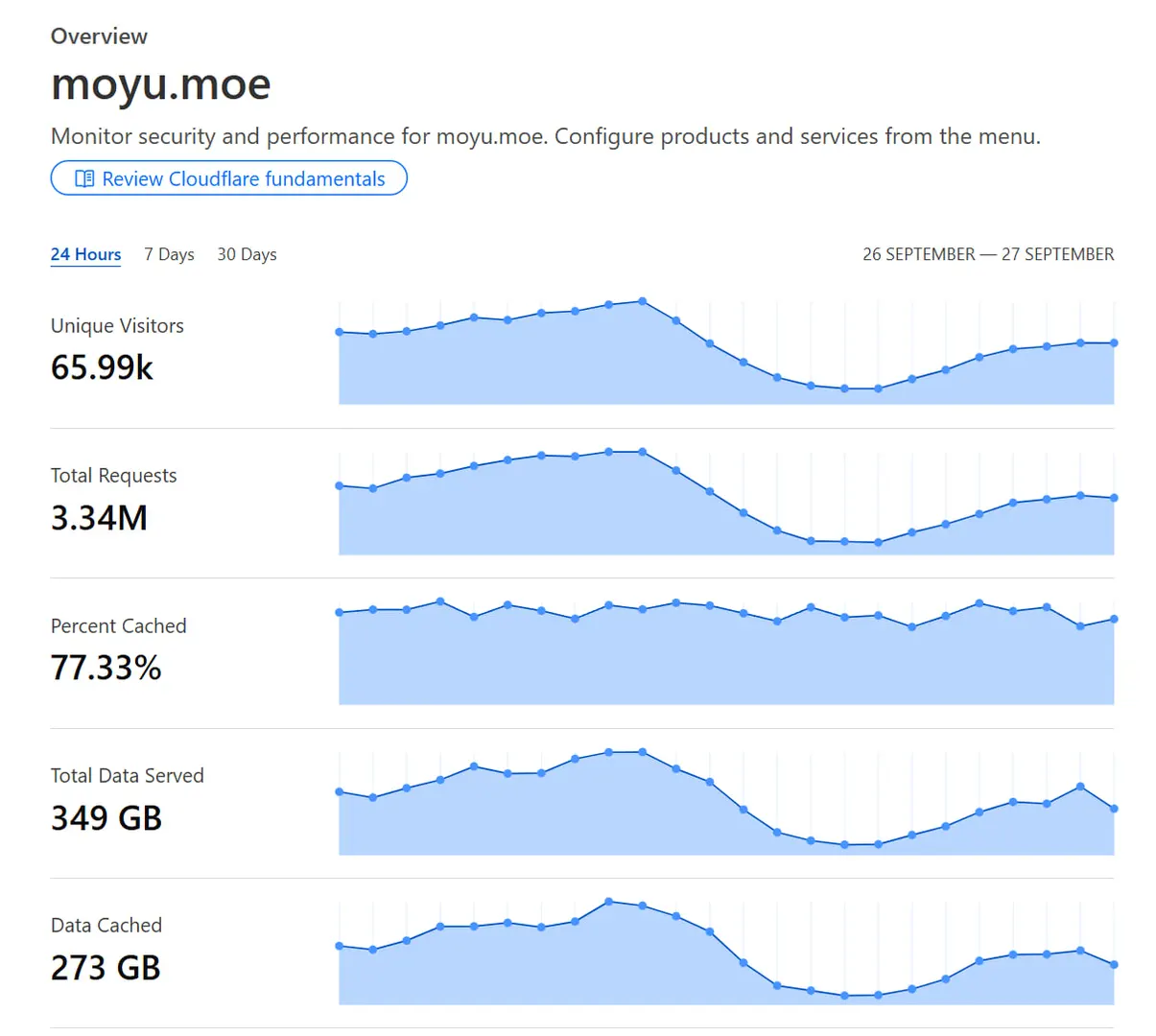

The screenshot above shows the traffic stats for this website's patch site, moyu.moe, on 26/09.

You can see that Cloudflare reports an absurd statistic of nearly 70k daily active users. In reality, according to genuine statistics from Umami, this number is just over 25k.

This means that roughly two-thirds of all "users" are crawlers or scripts, and every one of them consumes your GetObjectCommand quota (even though GetObjectCommand is very cheap).

Anti-Scraping Tutorial

Here is a tutorial on how to configure this using Cloudflare CDN + Cloudflare Workers:

Using Cloudflare Workers to Download Files from a B2 Private Bucket

This article is truly invaluable. Without any knowledge in this area, discovering this solution would be quite difficult. I also received help from the owner of ohmygpt, TouchGal, and several other site owners to arrive at the solution in the article above.

Additionally, B2's S3-compatible API has some shortcomings that require close attention to detail. Below, we provide a robust, practical solution, which is currently in use by several websites.

Analysis of the Upload/Download Integration Principle

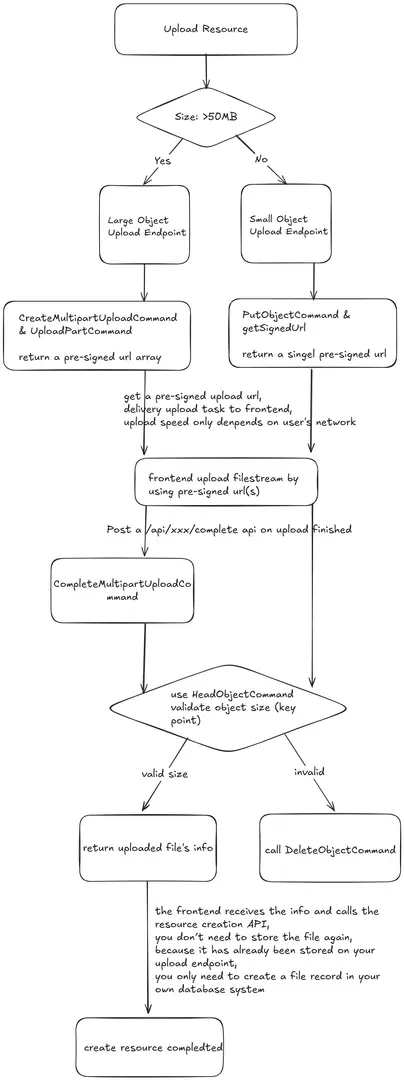

Let's use a diagram to briefly illustrate the process, specifically using Backblaze as the S3 Compatible Object Storage provider (as providers like AWS can achieve the same object operations with simpler commands). You can view the diagram at https://excalraw.com/#json=JZe0C_bUwdTI_oeCbnI8q,9Ku4FAq9kjwnQ2mNgsV5oQ.

I quickly drew this diagram myself, so there might be some omissions. It's best to read the detailed explanation below.

One thing not shown in the diagram is that this entire process requires the assistance of Redis and cron jobs. This is crucial for strictly preventing spam users.

Now, let's explain the process in detail.

Our forum's current tool resource uploader follows this logic. All screenshots below are based on https://www.kungal.com/edit/toolset/create.

Frontend Validation of User Uploads

First, the user's uploaded file should be validated on the frontend. Our website requires that uploaded files be one of the following types: .zip, .7z, or .rar. This is a very simple validation.
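A minimal sketch of such a check, assuming only the file name is inspected; the helper name isAllowedArchive is hypothetical, not part of the project. Since anything on the frontend can be bypassed, the server should apply the same rule again.

```typescript
// Allowed archive extensions, matching the .zip / .7z / .rar rule above
const ALLOWED_EXTENSIONS = ['zip', '7z', 'rar'] as const

// Returns true when the filename ends in one of the allowed extensions.
// The comparison is case-insensitive, so "Patch.ZIP" is accepted too.
// Names with no base (".zip") or no extension ("patch") are rejected.
export const isAllowedArchive = (filename: string): boolean => {
  const dot = filename.lastIndexOf('.')
  if (dot <= 0 || dot === filename.length - 1) {
    return false
  }
  const ext = filename.slice(dot + 1).toLowerCase()
  return (ALLOWED_EXTENSIONS as readonly string[]).includes(ext)
}
```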

After validation, when the user clicks "Upload", one of the following processes runs, depending on the size of the file.

Obtaining a Presigned URL via a Request

A Presigned URL allows the user to upload a file directly from the frontend to your object storage bucket, without needing to be proxied through your server. The speed depends entirely on the user's own upload bandwidth, not on your server's I/O, which is an excellent feature.

However, this brings a problem: anything on the frontend is untrustworthy and must be validated by the server. Below, we will focus on how to validate this upload.

< 50MB

50MB is a threshold we've set for file size on our website. Files smaller than 50MB are considered small files.
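Routing between the two upload endpoints comes down to a single comparison. This is a sketch: the 50 MiB value and the name pickUploadMode are assumptions standing in for the project's MAX_SMALL_FILE_SIZE config, whose real value is not shown. A file of exactly the threshold goes to the small-file path, which matches the server checks below (the small endpoint rejects filesize > MAX_SMALL_FILE_SIZE, the large one rejects filesize <= MAX_SMALL_FILE_SIZE).

```typescript
const MB = 1024 * 1024
// Assumed value mirroring the 50MB threshold described above
const MAX_SMALL_FILE_SIZE = 50 * MB

export type UploadMode = 'small' | 'large'

// Up to the threshold: one presigned PUT. Above it: multipart upload.
export const pickUploadMode = (filesize: number): UploadMode =>
  filesize <= MAX_SMALL_FILE_SIZE ? 'small' : 'large'
```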

Small files have an upload advantage: they don't require calling a series of complex upload APIs, which can lead to errors.

Here is an example of code for uploading a small file:

```typescript
import prisma from '~/prisma/prisma'
import { s3 } from '~/lib/s3/client'
import { getSignedUrl } from '@aws-sdk/s3-request-presigner'
import { generateRandomCode } from '~/server/utils/generateRandomCode'
import {
  MAX_SMALL_FILE_SIZE,
  KUN_VISUAL_NOVEL_UPLOAD_TIMEOUT_LIMIT
} from '~/config/upload'
import { initToolsetUploadSchema } from '~/validations/toolset'
import { parseFileName } from '~/server/utils/upload/parseFileName'
import { saveUploadSalt } from '~/server/utils/upload/saveUploadSalt'
import { canUserUpload } from '~/server/utils/upload/canUserUpload'
import { PutObjectCommand } from '@aws-sdk/client-s3'
import type { ToolsetSmallFileUploadResponse } from '~/types/api/toolset'

export default defineEventHandler(async (event) => {
  const body = await readBody(event)
  const parsed = initToolsetUploadSchema.safeParse(body)
  if (!parsed.success) {
    return kunError(event, parsed.error.message)
  }
  const { toolsetId, filename, filesize } = parsed.data

  if (filesize > MAX_SMALL_FILE_SIZE) {
    return kunError(event, 'File is too large, please use multipart upload.')
  }

  const userInfo = await getCookieTokenInfo(event)
  if (!userInfo) {
    return kunError(event, 'Please log in first.', 205)
  }

  const toolset = await prisma.galgame_toolset.findUnique({
    where: { id: toolsetId },
    select: { id: true }
  })
  if (!toolset) {
    return kunError(event, 'Toolset does not exist.')
  }

  const result = await canUserUpload(userInfo.uid, filesize)
  if (typeof result === 'string') {
    return kunError(event, result)
  }

  const { base, ext } = parseFileName(filename)
  const salt = generateRandomCode(7)
  const key = `toolset/${toolsetId}/${userInfo.uid}_${base}_${salt}.${ext}`

  // Some S3 compatible providers do not support createPresignedPost
  // const post = await createPresignedPost(s3, {
  //   Bucket: process.env.KUN_VISUAL_NOVEL_S3_STORAGE_BUCKET_NAME!,
  //   Key: key,
  //   Conditions: [
  //     ['content-length-range', filesize, filesize],
  //     ['eq', '$Content-Type', 'application/octet-stream']
  //   ],
  //   Fields: { 'Content-Type': 'application/octet-stream' },
  //   Expires: 3600
  // })

  // Unlike a presigned POST policy, this presigned PUT URL does not pin the
  // upload size, so the size has to be re-checked after the upload finishes
  const command = new PutObjectCommand({
    Bucket: process.env.KUN_VISUAL_NOVEL_S3_STORAGE_BUCKET_NAME!,
    Key: key,
    ContentType: 'application/octet-stream'
  })
  const url = await getSignedUrl(s3, command, {
    expiresIn: KUN_VISUAL_NOVEL_UPLOAD_TIMEOUT_LIMIT
  })

  await saveUploadSalt(key, 'small', salt, filesize, base, ext)

  const response: ToolsetSmallFileUploadResponse = {
    key,
    salt,
    url
  }
  return response
})
```

Normally, we could conveniently use createPresignedPost to create a presigned URL. Unfortunately, some object storage providers (like Backblaze) do not support this method, so we use PutObjectCommand + getSignedUrl to achieve the same effect.

A key part here is using Redis to store information about the file, because various unexpected situations can occur during the upload process.

The file might not be uploaded, some malicious users might upload "fake files" to waste the website's storage, some users might stop uploading halfway through, or some users might upload a file but never publish the resource, turning it into an "orphan file," etc.

Here is the saveUploadSalt function:

```typescript
export interface UploadSaltCache {
  key: string
  type: string
  salt: string
  filesize: number
  normalizedFilename: string
  fileExtension: string
}

export const saveUploadSalt = async (
  key: string,
  type: 'small' | 'large',
  salt: string,
  filesize: number,
  normalizedFilename: string,
  fileExtension: string
) => {
  const storedValue = JSON.stringify({
    key,
    type,
    salt,
    filesize,
    normalizedFilename,
    fileExtension
  } satisfies UploadSaltCache)

  await useStorage('redis').setItem(`toolset:resource:${salt}`, storedValue)
}

export const getUploadCache = async (salt?: string) => {
  if (!salt) {
    return
  }
  const res = await useStorage('redis').getItem(`toolset:resource:${salt}`)
  if (!res) {
    return
  }

  // useStorage usually parses stored JSON strings automatically,
  // but fall back to JSON.parse in case a raw string comes back
  const parsedResult = (
    typeof res === 'string' ? JSON.parse(res) : res
  ) as UploadSaltCache
  return parsedResult
}

export const removeUploadCache = async (salt: string) => {
  await useStorage('redis').removeItem(`toolset:resource:${salt}`)
}
```

Here, we save some key information to be used later by a cron job to scan for and delete these junk or orphan files. Since the file is uploaded by the user from the frontend, all frontend operations must be rigorously validated.

> 50MB

Files larger than 50MB should use multipart upload. This makes it easy to implement an upload progress bar, preventing users from feeling like "why is my file taking so long to upload?", "when will it finish?", or "is the shit website stuck?".

Like small files, large files must also obtain presigned URLs, and the upload task is then handed off to the frontend, without consuming server I/O.

Here is an example of uploading a large file:

```typescript
import prisma from '~/prisma/prisma'
import { s3 } from '~/lib/s3/client'
import {
  CreateMultipartUploadCommand,
  UploadPartCommand
} from '@aws-sdk/client-s3'
import { getSignedUrl } from '@aws-sdk/s3-request-presigner'
import { generateRandomCode } from '~/server/utils/generateRandomCode'
import {
  LARGE_FILE_CHUNK_SIZE,
  MAX_SMALL_FILE_SIZE,
  MAX_LARGE_FILE_SIZE,
  KUN_VISUAL_NOVEL_UPLOAD_TIMEOUT_LIMIT
} from '~/config/upload'
import { initToolsetUploadSchema } from '~/validations/toolset'
import { parseFileName } from '~/server/utils/upload/parseFileName'
import { saveUploadSalt } from '~/server/utils/upload/saveUploadSalt'
import { canUserUpload } from '~/server/utils/upload/canUserUpload'
import type { ToolsetLargeFileUploadResponse } from '~/types/api/toolset'

const MB = 1024 * 1024

export default defineEventHandler(async (event) => {
  const input = await kunParsePostBody(event, initToolsetUploadSchema)
  if (typeof input === 'string') {
    return kunError(event, input)
  }
  const { toolsetId, filename, filesize } = input

  if (filesize <= MAX_SMALL_FILE_SIZE) {
    return kunError(
      event,
      `File does not exceed ${MAX_SMALL_FILE_SIZE / MB}MB, please use the small file upload endpoint`
    )
  }
  if (filesize > MAX_LARGE_FILE_SIZE) {
    return kunError(event, `Single file max size is ${MAX_LARGE_FILE_SIZE / (MB * 1024)}GB.`)
  }

  const userInfo = await getCookieTokenInfo(event)
  if (!userInfo) {
    return kunError(event, 'Please log in first', 205)
  }

  const toolset = await prisma.galgame_toolset.findUnique({
    where: { id: toolsetId },
    select: { id: true }
  })
  if (!toolset) {
    return kunError(event, 'Toolset does not exist')
  }

  const result = await canUserUpload(userInfo.uid, filesize)
  if (typeof result === 'string') {
    return kunError(event, result)
  }

  const { base, ext } = parseFileName(filename)
  const salt = generateRandomCode(7)
  const key = `toolset/${toolsetId}/${userInfo.uid}_${base}_${salt}.${ext}`
  const bucket = process.env.KUN_VISUAL_NOVEL_S3_STORAGE_BUCKET_NAME!

  const createRes = await s3.send(
    new CreateMultipartUploadCommand({
      Bucket: bucket,
      Key: key,
      ContentType: 'application/octet-stream'
    })
  )
  if (!createRes.UploadId) {
    return kunError(event, 'Failed to initialize multipart upload, please try again later')
  }

  // Sign one URL per part; S3 part numbers start at 1
  const partCount = Math.ceil(filesize / LARGE_FILE_CHUNK_SIZE)
  const urls: { partNumber: number; url: string }[] = []
  for (let i = 1; i <= partCount; i++) {
    const url = await getSignedUrl(
      s3,
      new UploadPartCommand({
        Bucket: bucket,
        Key: key,
        UploadId: createRes.UploadId,
        PartNumber: i
      }),
      { expiresIn: KUN_VISUAL_NOVEL_UPLOAD_TIMEOUT_LIMIT }
    )
    urls.push({ partNumber: i, url })
  }

  await saveUploadSalt(key, 'large', salt, filesize, base, ext)

  const response: ToolsetLargeFileUploadResponse = {
    key,
    salt,
    uploadId: createRes.UploadId,
    partSize: LARGE_FILE_CHUNK_SIZE,
    urls
  }
  return response
})
```

The most distinctive feature of large files compared to small ones is that they return a set of presigned URLs, not just one.

When the frontend uses this set of URLs to upload, it needs to slice the file into parts and upload each part separately using these presigned URLs.
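The slicing step boils down to computing one byte range per part number. Here is a sketch with a hypothetical helper name, using the same offset arithmetic as the frontend upload loop later in this article (part numbers start at 1, and only the last part may be shorter):

```typescript
export interface PartRange {
  partNumber: number // S3 part numbers start at 1
  start: number // inclusive byte offset
  end: number // exclusive byte offset, as expected by Blob.slice(start, end)
}

// Splits `filesize` bytes into consecutive ranges of `partSize` bytes;
// only the final range may be shorter than partSize.
export const computePartRanges = (filesize: number, partSize: number): PartRange[] => {
  if (partSize <= 0) {
    throw new Error('partSize must be positive')
  }
  const count = Math.ceil(filesize / partSize)
  const ranges: PartRange[] = []
  for (let partNumber = 1; partNumber <= count; partNumber++) {
    const start = (partNumber - 1) * partSize
    ranges.push({ partNumber, start, end: Math.min(start + partSize, filesize) })
  }
  return ranges
}
```

Each range maps directly onto File.slice(start, end) and onto the presigned URL with the same partNumber.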

Here, we also save a key-value pair with crucial file information in Redis.

The minimum part size for S3 is 5MB (for every part except the last), which is what we have set. We recommend not exceeding 20MB. First, larger parts give users with lower upload bandwidth a poor experience, as they may wait a long time without seeing the progress bar move. Second, resuming an interrupted upload gets worse, because more of the file has to be re-uploaded.

A size of around 10MB is common. Through practice, we've found that having more requests doesn't have any negative impact and actually provides a better experience for the majority of users with smaller bandwidth.
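To make the trade-off concrete: with 10 MiB parts, a 1 GiB file becomes ceil(1024 / 10) = 103 parts. S3 also caps a single multipart upload at 10,000 parts, so fixed 10 MiB parts top out at about 97.7 GiB. The sketch below (hypothetical helper; the 10 MiB default is an assumption based on the guideline above) keeps the preferred size and only grows it when a file would otherwise exceed the part limit.

```typescript
const MiB = 1024 * 1024
const S3_MIN_PART_SIZE = 5 * MiB // minimum for every part except the last
const S3_MAX_PARTS = 10_000 // maximum number of parts in one upload

// Returns the preferred part size unless the file would then need more than
// S3_MAX_PARTS parts, in which case the size grows (rounded up to whole MiB).
export const choosePartSize = (filesize: number, preferred = 10 * MiB): number => {
  const partSize = Math.max(preferred, S3_MIN_PART_SIZE)
  const smallestAllowed = Math.ceil(filesize / S3_MAX_PARTS)
  if (smallestAllowed <= partSize) {
    return partSize
  }
  return Math.ceil(smallestAllowed / MiB) * MiB
}
```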

File Key / Download Filename

Here, we've directly set the file key to {User UID}_{Sanitized Filename}_{7 random characters}.

This design actually has some minor issues. It's unwise to have file keys contain characters other than English letters, numbers, and underscores, as this can cause problems in many scenarios.
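If you keep a human-readable part in the key, one way to enforce the letters/digits/underscore rule is a sanitizer like the one below. It is a hypothetical helper, not the project's parseFileName (whose implementation isn't shown), and the 64-character cap and the "file" fallback are arbitrary choices.

```typescript
// Replaces every run of characters outside [A-Za-z0-9_] with "_", collapses
// repeated underscores, caps the length, and trims leading/trailing "_".
// Falls back to "file" when nothing usable remains (e.g. an all-CJK name).
export const sanitizeBaseName = (base: string, maxLength = 64): string => {
  const cleaned = base
    .replace(/[^A-Za-z0-9_]+/g, '_')
    .replace(/_+/g, '_')
    .slice(0, maxLength)
    .replace(/^_+|_+$/g, '')
  return cleaned.length > 0 ? cleaned : 'file'
}
```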

Generally, all file keys should be named using a pure hash, and you can maintain a file table to store file metadata. This is a more robust practice.
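A sketch of that layout, assuming a hypothetical FileRecord table shape: the object key contains only a prefix, a random identifier (random hex stands in for a content hash here, since the server never sees the file bytes), and a normalized extension, while the original name is kept in the table for the download step.

```typescript
import { randomBytes } from 'node:crypto'

// Hypothetical shape of a row in a file metadata table
export interface FileRecord {
  key: string
  originalName: string
  size: number
  uploaderUid: number
}

// The object key carries no user-controlled text except a validated
// extension; the user's filename is stored only as metadata.
export const createFileRecord = (
  toolsetId: number,
  uploaderUid: number,
  originalName: string,
  size: number
): FileRecord => {
  const dot = originalName.lastIndexOf('.')
  const rawExt = dot > 0 ? originalName.slice(dot + 1).toLowerCase() : ''
  const ext = /^[a-z0-9]{1,10}$/.test(rawExt) ? `.${rawExt}` : ''
  return {
    key: `toolset/${toolsetId}/${randomBytes(16).toString('hex')}${ext}`,
    originalName,
    size,
    uploaderUid
  }
}
```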

My consideration was to let users know what file they are downloading, rather than a jumble of hash characters, so I kept the filename. However, this was not the wisest choice.

The correct approach, as members of our development group pointed out, is to set the Content-Disposition header on the download. For example, the implementation below:

```typescript
import prisma from '~/prisma/prisma'
import { s3 } from '~/lib/s3/client'
import { GetObjectCommand } from '@aws-sdk/client-s3'
import { getSignedUrl } from '@aws-sdk/s3-request-presigner'
import { getToolsetDownloadUrlSchema } from '~/validations/toolset'

export default defineEventHandler(async (event) => {
  const input = await kunParseGetQuery(event, getToolsetDownloadUrlSchema)
  if (typeof input === 'string') {
    return kunError(event, input)
  }
  const { key } = input

  // Look up the original filename and MIME type in the file table
  const fileMeta = await prisma.toolset_file.findUnique({
    where: { key },
    select: { originalName: true, mimeType: true }
  })
  if (!fileMeta) {
    return kunError(event, 'File data not found')
  }

  const bucket = process.env.KUN_VISUAL_NOVEL_S3_STORAGE_BUCKET_NAME!
  const encodedName = encodeURIComponent(fileMeta.originalName)

  // The Response* fields are signed into the URL and ask the provider to
  // override these headers, so the browser saves the file under its real name
  const command = new GetObjectCommand({
    Bucket: bucket,
    Key: key,
    ResponseContentType: fileMeta.mimeType || 'application/octet-stream',
    ResponseContentDisposition: `attachment; filename="${encodedName}"; filename*=UTF-8''${encodedName}`
  })

  const url = await getSignedUrl(s3, command, { expiresIn: 3600 })
  return { url }
})
```

Note the following points about the code above:

- Never let the browser access the raw S3 key directly; downloads must go through a signed URL.

- It's still best to strip control characters (such as \n) and quotes (") from the filename. The filename* parameter exists for compatibility with browsers handling non-ASCII filenames (especially Chinese characters).
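The header in the download code above passes the percent-encoded name to both filename and filename*. A slightly stricter variant, following RFC 6266 and RFC 5987, sends a plain-ASCII fallback in filename and also percent-encodes the characters ' ( ) *, which encodeURIComponent leaves untouched but RFC 5987 does not allow unescaped. The function names are hypothetical; the result would be passed as ResponseContentDisposition.

```typescript
// RFC 5987 percent-encoding: encodeURIComponent plus the four characters
// it leaves alone that are not valid RFC 5987 attr-chars
const encodeRFC5987 = (value: string): string =>
  encodeURIComponent(value).replace(
    /['()*]/g,
    (c) => `%${c.charCodeAt(0).toString(16).toUpperCase()}`
  )

export const buildContentDisposition = (filename: string): string => {
  // Drop control characters (CR, LF, etc.) that could break the header
  const safe = filename.replace(/[\u0000-\u001f\u007f]/g, '')
  // ASCII fallback for old clients: non-ASCII, quotes and backslashes become "_"
  const fallback = safe.replace(/[^\x20-\x7e]|["\\]/g, '_')
  return `attachment; filename="${fallback}"; filename*=UTF-8''${encodeRFC5987(safe)}`
}
```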

How to Migrate from the Flawed Practice Above

I mentioned that the current key design has problems. After discussions in our development group, migration can be achieved through the following steps:

- Use RenameObjectCommand (if your provider supports it) to rename the object at the storage layer.

- Create a mapping between the key and the file metadata in a resource or file table. When querying, you can read the original filename directly from this mapping.

As you can see, migration is also very simple. If your functionality is not very complex, you can use our current implementation.
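Not every S3-compatible provider implements RenameObjectCommand (on AWS it is limited to certain bucket types), so a portable fallback is to copy each object to its new key with CopyObjectCommand, record the mapping in the file table, and then delete the old key with DeleteObjectCommand. The pure planning step might look like the sketch below. The regex assumes the current toolset/{toolsetId}/{uid}_{base}_{salt}.{ext} layout with an alphanumeric 7-character salt, and planKeyMigration is a hypothetical name. Only the sanitized base name can be recovered this way, not the user's exact original filename.

```typescript
import { randomBytes } from 'node:crypto'

export interface MigrationStep {
  oldKey: string
  newKey: string
  originalName: string // best available name: sanitized base plus extension
}

// Old layout: toolset/{toolsetId}/{uid}_{base}_{salt}.{ext}
// Assumption: the 7-character salt is alphanumeric.
const OLD_KEY = /^toolset\/(\d+)\/(\d+)_(.+)_([A-Za-z0-9]{7})\.([A-Za-z0-9]+)$/

export const planKeyMigration = (oldKey: string): MigrationStep | null => {
  const match = OLD_KEY.exec(oldKey)
  if (!match) {
    return null // not in the old layout; leave it alone
  }
  const [, toolsetId, , base, , ext] = match
  return {
    oldKey,
    newKey: `toolset/${toolsetId}/${randomBytes(16).toString('hex')}.${ext.toLowerCase()}`,
    originalName: `${base}.${ext}`
  }
}
```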

Presigned Policy Settings

The following policies are detailed suggestions. Please adapt them to your project's actual needs.

Presigned URL Policy

The expiration time for upload signatures (PUT/UploadPart) should be relatively short, recommended between 30 minutes to 1 hour.

If it's too short, most users won't have time to upload (at an average speed of 3 MB/s, uploading just 1GB takes 5 to 10 minutes).

If it's too long, it widens the window for abuse. Imagine a freeloader with a 10 Gbps dedicated server hammering your upload endpoint for an entire day (on the order of 100 TB), and there goes your house again.

The TTL for download signatures is recommended to be around 1 hour, adjusted dynamically based on the scenario (public vs. private resources).
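One way to pick the upload TTL is to estimate the transfer time from an assumed bandwidth and clamp it to the 30 to 60 minute window above. The sketch below assumes a slow uploader at 1 MiB/s; the helper name and defaults are illustrative. Its result would be passed as expiresIn to getSignedUrl. For multipart uploads, every part URL is signed upfront with the same expiry, so the whole upload has to finish within that window.

```typescript
const MiB = 1024 * 1024

// Estimated seconds to upload `filesize` bytes at `assumedBytesPerSecond`,
// clamped into [minSeconds, maxSeconds] (30 to 60 minutes by default).
export const uploadUrlTtlSeconds = (
  filesize: number,
  assumedBytesPerSecond = 1 * MiB,
  minSeconds = 30 * 60,
  maxSeconds = 60 * 60
): number => {
  const estimate = Math.ceil(filesize / assumedBytesPerSecond)
  return Math.min(maxSeconds, Math.max(minSeconds, estimate))
}
```

With the article's own numbers, 1 GiB at 3 MiB/s works out to 342 seconds, just under 6 minutes.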

The signature generation logic must be on the backend and only callable by authorized users. The frontend should not store any secret keys.

IAM / Least Privilege

Create different roles/accounts for different purposes. Especially in a large system, running every service under one all-powerful credential is never a good idea. For example, you could split them like this:

- presigner account: only allows the S3 operations required for creating presigned URLs (CreateMultipartUploadCommand, PutObjectCommand, UploadPartCommand, AbortMultipartUploadCommand, HeadObjectCommand).

- cleanup account: allows DeleteObjectCommand and ListObjectsCommand (the latter is a Class C operation, use with caution).
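On AWS S3, the split above maps onto IAM policies roughly as follows (written as TypeScript objects to stay in one language). This is an assumption-laden sketch: the bucket name and toolset/ prefix are placeholders, and Backblaze B2 does not use IAM policies at all; it scopes application keys by capabilities instead. Note that one IAM action can cover several SDK commands.

```typescript
const BUCKET = 'example-bucket' // placeholder bucket name

export const presignerPolicy = {
  Version: '2012-10-17',
  Statement: [
    {
      Effect: 'Allow',
      // s3:PutObject covers PutObject, CreateMultipartUpload, UploadPart and
      // CompleteMultipartUpload; HeadObject is authorized by s3:GetObject
      Action: ['s3:PutObject', 's3:AbortMultipartUpload', 's3:GetObject'],
      Resource: [`arn:aws:s3:::${BUCKET}/toolset/*`]
    }
  ]
}

export const cleanupPolicy = {
  Version: '2012-10-17',
  Statement: [
    {
      Effect: 'Allow',
      Action: ['s3:DeleteObject'],
      Resource: [`arn:aws:s3:::${BUCKET}/toolset/*`]
    },
    {
      // Listing is granted on the bucket itself, not on the objects in it
      Effect: 'Allow',
      Action: ['s3:ListBucket'],
      Resource: [`arn:aws:s3:::${BUCKET}`]
    }
  ]
}
```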

Hash Validation

Backblaze does not support configuring the checksum algorithm via x-amz-meta-sha256. If your chosen provider supports this, you can use this field for validation.

After the "Complete" step described below, you can use HeadObjectCommand to get the checksum (if supported).
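If you do compare hashes, the frontend can compute one with the Web Crypto API before uploading and send it along with the init request. This is a minimal sketch (hypothetical helper name) that returns lowercase hex; it reads the whole file into memory, so multi-gigabyte files would need a streaming hash library instead. Providers that report checksums may encode them differently (S3's ChecksumSHA256 is base64, for instance), so convert before comparing.

```typescript
// Computes the SHA-256 digest of a Blob/File with the Web Crypto API
// (browsers and recent Node.js versions) and returns it as lowercase hex.
export const sha256Hex = async (blob: Blob): Promise<string> => {
  // Loads the entire blob into memory; only suitable for modest sizes
  const digest = await crypto.subtle.digest('SHA-256', await blob.arrayBuffer())
  return Array.from(new Uint8Array(digest))
    .map((byte) => byte.toString(16).padStart(2, '0'))
    .join('')
}
```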

The Backblaze S3 Client even requires an additional configuration field, requestChecksumCalculation:

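```typescript
import { S3Client } from '@aws-sdk/client-s3'

export const s3 = new S3Client({
  endpoint: process.env.KUN_VISUAL_NOVEL_S3_STORAGE_ENDPOINT!,
  region: process.env.KUN_VISUAL_NOVEL_S3_STORAGE_REGION!,
  credentials: {
    accessKeyId: process.env.KUN_VISUAL_NOVEL_S3_STORAGE_ACCESS_KEY_ID!,
    secretAccessKey: process.env.KUN_VISUAL_NOVEL_S3_STORAGE_SECRET_ACCESS_KEY!
  },
  // Recent AWS SDK v3 releases attach checksums to requests by default;
  // WHEN_REQUIRED limits that to operations that actually require one
  requestChecksumCalculation: 'WHEN_REQUIRED'
})
```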

Frontend Uploads Using Presigned URLs

We have obtained one or a set of presigned URLs. Now, the frontend takes these URLs and needs to call fetch to upload the user's local file to your object storage bucket.

Note that everything up to this point happens after the user clicks "Confirm Upload." From the user's perspective, it feels like they are uploading the file directly to your server; the process of obtaining the presigned URL is transparent to them.

Below is an example of frontend code for uploading a file using presigned URLs.

Large File Upload

```typescript
const uploadLarge = async (f: File) => {
  uploadStatus.value = 'largeInit'

  const initUploadData = {
    toolsetId: props.toolsetId,
    filename: f.name,
    filesize: f.size
  }
  const result = useKunSchemaValidator(initToolsetUploadSchema, initUploadData)
  if (!result) {
    return
  }

  progress.value = 0
  const initRes = await $fetch(`/api/toolset/${props.toolsetId}/upload/large`, {
    method: 'POST',
    body: initUploadData,
    watch: false,
    ...kungalgameResponseHandler
  })
  if (!initRes) {
    uploadStatus.value = 'idle'
    return
  }

  try {
    const partSize: number = initRes.partSize
    const urls: { partNumber: number; url: string }[] = initRes.urls
    const parts: { PartNumber: number; ETag: string }[] = []

    uploadStatus.value = 'largeUploading'
    for (let i = 0; i < urls.length; i++) {
      const { partNumber, url } = urls[i]
      const start = (partNumber - 1) * partSize
      const end = Math.min(start + partSize, f.size)
      const blob = f.slice(start, end)

      // Send the raw bytes of this slice (no FormData wrapper)
      const resp = await fetch(url, {
        headers: { 'Content-Type': 'application/octet-stream' },
        method: 'PUT',
        body: blob
      })
      if (!resp.ok) {
        throw new Error(`Part ${partNumber} failed with status ${resp.status}`)
      }

      // Header lookup is case-insensitive. The bucket's CORS rules must
      // expose ETag, otherwise the browser hides it from JavaScript
      const etag = resp.headers.get('ETag')
      if (!etag) {
        throw new Error('Missing ETag')
      }
      parts.push({ PartNumber: partNumber, ETag: etag })
      progress.value = Math.round(((i + 1) / urls.length) * 100)
    }

    uploadStatus.value = 'largeComplete'
    const completeUploadData = {
      salt: initRes.salt,
      uploadId: initRes.uploadId,
      parts
    }
    const result = useKunSchemaValidator(
      completeToolsetUploadSchema,
      completeUploadData
    )
    if (!result) {
      return
    }

    const done = await $fetch(
      `/api/toolset/${props.toolsetId}/upload/complete`,
      {
        method: 'POST',
        body: completeUploadData,
        watch: false,
        ...kungalgameResponseHandler
      }
    )
    if (done) {
      useMessage('Upload successfully!', 'success')
      emits('onUploadSuccess', done)
    }
  } catch (e) {
    // Any failure: ask the server to abort the multipart upload
    if (initRes?.uploadId) {
      const abortUploadData = {
        salt: initRes.salt,
        uploadId: initRes.uploadId
      }
      const result = useKunSchemaValidator(
        abortToolsetUploadSchema,
        abortUploadData
      )
      if (!result) {
        return
      }
      await $fetch(`/api/toolset/${props.toolsetId}/upload/abort`, {
        method: 'POST',
        body: abortUploadData,
        watch: false,
        ...kungalgameResponseHandler
      })
    }
  } finally {
    uploadStatus.value = 'complete'
  }
}
```
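The large-file loop uploads parts strictly one at a time. If you want some parallelism without flooding slow connections, a small bounded-concurrency helper is enough; this is a sketch with a hypothetical name, not code from the project. Because results come back in input order, the parts array stays sorted by PartNumber, which CompleteMultipartUpload requires.

```typescript
// Runs `worker` over `items` with at most `limit` calls in flight and
// returns the results in the same order as `items`. The first rejection
// rejects the whole call (parts already in flight may still finish).
export const mapWithConcurrency = async <T, R>(
  items: readonly T[],
  limit: number,
  worker: (item: T, index: number) => Promise<R>
): Promise<R[]> => {
  const results: R[] = new Array(items.length)
  let next = 0
  // Each runner claims the next unprocessed index; JavaScript runs these
  // synchronous steps one at a time, so no index is handed out twice
  const runnerCount = Math.max(1, Math.min(limit, items.length))
  const runners = Array.from({ length: runnerCount }, async () => {
    while (next < items.length) {
      const index = next++
      results[index] = await worker(items[index], index)
    }
  })
  await Promise.all(runners)
  return results
}
```

In uploadLarge, the worker would perform the fetch and ETag check for one { partNumber, url } entry and return { PartNumber, ETag }; progress would then be counted by completed parts rather than by loop index.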

Small File Upload

```typescript
const uploadSmall = async (f: File) => {
  uploadStatus.value = 'smallInit'

  const initUploadData = {
    toolsetId: props.toolsetId,
    filename: f.name,
    filesize: f.size
  }
  const result = useKunSchemaValidator(initToolsetUploadSchema, initUploadData)
  if (!result) {
    return
  }

  const initRes = await $fetch(`/api/toolset/${props.toolsetId}/upload/small`, {
    method: 'POST',
    body: initUploadData,
    watch: false,
    ...kungalgameResponseHandler
  })
  if (!initRes) {
    uploadStatus.value = 'idle'
    return
  }

  try {
    uploadStatus.value = 'smallUploading'
    // PUT the raw file as the request body (no FormData wrapper); keep the
    // Content-Type in sync with the ContentType used when signing
    const resp = await fetch(initRes.url, {
      headers: { 'Content-Type': 'application/octet-stream' },
      method: 'PUT',
      body: f
    })
    if (!resp.ok) {
      // Stop before /complete; the cached entry is cleaned up by the cron job
      throw new Error(`Upload failed with status ${resp.status}`)
    }

    uploadStatus.value = 'smallComplete'
    const completeUploadData = {
      salt: initRes.salt
    }
    const result = useKunSchemaValidator(
      completeToolsetUploadSchema,
      completeUploadData
    )
    if (!result) {
      return
    }

    const done = await $fetch(
      `/api/toolset/${props.toolsetId}/upload/complete`,
      {
        method: 'POST',
        body: completeUploadData,
        watch: false,
        ...kungalgameResponseHandler
      }
    )
    if (done) {
      useMessage('Upload successfully!', 'success')
      emits('onUploadSuccess', done)
    }
  } finally {
    uploadStatus.value = 'complete'
  }
}
```

There is a very easy-to-miss issue here. When we upload files on the frontend, we often use new FormData to place the file in a FormData object and send it to the backend. However, in this scenario, this is not a viable approach.

Consider the following situation:

Why is it that after uploading an object to B2, the filesize calculated on the frontend is 15521335, but once the upload completes, HeadObject returns a ContentLength of 15521549?

Then, when we use HeadObjectCommand on the backend to validate the file size, an error will occur. So why does this happen?

Actually, 15521549 - 15521335 = 214. These 214 bytes are the boundary and header overhead of multipart/form-data.

So, the cause of the error is that we used new FormData and appended the binary file, which led to a validation error (the validation error occurs on our backend when we re-check the file size; the file upload itself will not report an error).
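You can reproduce the overhead locally with the Fetch API's own multipart serializer. The sketch below (hypothetical helper name; the field name "file" and filename "patch.zip" are arbitrary) wraps a Blob in FormData and measures how much bigger the serialized body is. The exact number depends on the boundary string and field headers, so it won't necessarily be 214 bytes, but it is always positive.

```typescript
// Measures how many extra bytes multipart/form-data adds around a file,
// using the Fetch API's serializer (browsers and Node.js 18+).
export const multipartOverhead = async (file: Blob): Promise<number> => {
  const form = new FormData()
  form.append('file', file, 'patch.zip')
  // Response serializes FormData the same way fetch would for a request body
  const body = await new Response(form).arrayBuffer()
  return body.byteLength - file.size
}
```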

Is FormData a Common Practice for Uploads?

- Common on the frontend: the browser's native FormData is indeed the most common way to upload files, especially since traditional backend endpoints naturally support multipart/form-data.

- Not common for direct cloud storage uploads: object storage services (S3, B2, OSS, etc.) expect direct PUT requests carrying the raw binary stream of the file (Content-Type: application/octet-stream). These services sit at the storage layer and expect to receive the file itself, not a file wrapped in a multipart envelope.

Does FormData Change the File?

- The file content itself is not modified. The binary part embedded in the multipart body is still the complete original file, byte for byte.

- But the total size of the stored object will be larger. Multipart bodies include boundary strings and field headers, and the storage layer doesn't strip these extra bytes automatically; it stores the entire HTTP body as the object.

Therefore, the downloaded object is no longer the user's original file; it contains extra multipart boundary content, unless your download logic can parse it out again (which it usually won't do automatically).

In summary, the upload logic we've written here should not wrap the file in FormData.

Handling File Upload Errors (This process is only for large files)

When a large file upload fails, we perform this action:

```typescript
await $fetch(`/api/toolset/${props.toolsetId}/upload/abort`, {
  method: 'POST',
  body: abortUploadData,
  watch: false,
  ...kungalgameResponseHandler
})
```

This will request the following API endpoint:

```typescript
import { s3 } from '~/lib/s3/client'
import { AbortMultipartUploadCommand } from '@aws-sdk/client-s3'
import { abortToolsetUploadSchema } from '~/validations/toolset'
import {
  getUploadCache,
  removeUploadCache
} from '~/server/utils/upload/saveUploadSalt'

export default defineEventHandler(async (event) => {
  const userInfo = await getCookieTokenInfo(event)
  if (!userInfo) {
    return kunError(event, 'Login expired', 205)
  }

  const input = await kunParsePostBody(event, abortToolsetUploadSchema)
  if (typeof input === 'string') {
    return kunError(event, input)
  }
  const { salt, uploadId } = input

  const fileCache = await getUploadCache(salt)
  if (!fileCache) {
    return kunError(event, 'File cache data not found')
  }

  await s3.send(
    new AbortMultipartUploadCommand({
      Bucket: process.env.KUN_VISUAL_NOVEL_S3_STORAGE_BUCKET_NAME!,
      Key: fileCache.key,
      UploadId: uploadId
    })
  )
  await removeUploadCache(salt)

  return 'Moemoe abort upload successfully!'
})
```

Here, we mainly call AbortMultipartUploadCommand to interrupt the initial CreateMultipartUploadCommand because the file upload failed.

Why abort? Is it okay not to?

Yes, it can be. Under many providers' rules, parts uploaded after CreateMultipartUploadCommand that are never merged with CompleteMultipartUploadCommand are deleted automatically after roughly a week; after that they no longer occupy space in the bucket or incur charges (you only pay for that period). This is not universal, though: on AWS S3, incomplete multipart uploads are kept (and billed) until they are aborted or removed by a lifecycle rule, so check how your provider handles them.

Here, we use a more elegant and swift solution: AbortMultipartUploadCommand. This will immediately terminate the upload task and clear all file parts instantly. (For Backblaze, deleted files become "hidden files," and these hidden files are billed for one more business day before being deleted, as mentioned in the initial article about modifying bucket information).

What if the user never requests the /api/toolset/${props.toolsetId}/upload/abort endpoint?

That's also fine. We have designed a specific feature for this situation: a cron job based on Redis keys.

Notice that in the API where we initially request the presigned URL, we use Redis to store key information about the file. Here, we can use a cron job, running periodically, to batch-process these orphan files.

Here is an example implementation:

```typescript
import { DeleteObjectCommand } from '@aws-sdk/client-s3'
import { s3 } from '~/lib/s3/client'
import {
  removeUploadCache,
  type UploadSaltCache
} from '~/server/utils/upload/saveUploadSalt'

export default defineTask({
  meta: {
    name: 'cleanup-toolset-resource',
    description:
      'Every hour, delete S3 objects referenced by toolset:resource keys in Redis.'
  },
  async run() {
    const storage = useStorage('redis')
    const keys = await storage.getKeys('toolset:resource')
    let deletedCount = 0

    for (const key of keys) {
      try {
        const store = await storage.getItem(key)
        if (!store) {
          continue
        }
        // Same parsing rule as getUploadCache
        const parsedResult = (
          typeof store === 'string' ? JSON.parse(store) : store
        ) as UploadSaltCache

        if (parsedResult.key) {
          await s3.send(
            new DeleteObjectCommand({
              Bucket: process.env.KUN_VISUAL_NOVEL_S3_STORAGE_BUCKET_NAME!,
              Key: parsedResult.key
            })
          )
          await removeUploadCache(parsedResult.salt)
          deletedCount++
        }
      } catch (err) {
        // Log and keep going; the entry is retried on the next run
        console.error(`Failed to delete object for key ${key}:`, err)
      }
    }

    return { result: `Deleted ${deletedCount} toolset resources from S3.` }
  }
})
```

Here, we delete all objects from S3 based on Redis keys starting with toolset:resource and remove the cache using removeUploadCache.

This guarantees that orphan files are deleted within a bounded period, so they cannot keep accruing storage charges.
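One caveat worth noting: as written, the task deletes every object that still has a toolset:resource entry, including a file that a user uploaded a few minutes before the job runs and is still describing in the publish form. A minimal sketch of an age guard follows, assuming a hypothetical `createdAt` timestamp is saved alongside each cache entry when the presigned URL is issued (the original UploadSaltCache may not have such a field).

```typescript
// Hypothetical shape: the article's UploadSaltCache stores key, salt and
// filesize; `createdAt` (epoch milliseconds) is an assumed extra field that
// would be written when the presigned URL is issued.
interface PendingUpload {
  key: string
  salt: string
  filesize: number
  createdAt: number
}

// Treat only entries older than `maxAgeMs` as orphans. Younger entries may
// belong to users who finished uploading and are still filling in the form.
function selectOrphans(
  entries: PendingUpload[],
  now: number,
  maxAgeMs: number
): PendingUpload[] {
  return entries.filter((entry) => now - entry.createdAt >= maxAgeMs)
}

const ONE_HOUR = 60 * 60 * 1000
const now = Date.UTC(2025, 0, 1, 12)
const pending: PendingUpload[] = [
  { key: 'toolset/fresh.zip', salt: 'a', filesize: 10, createdAt: now - 5 * 60 * 1000 },
  { key: 'toolset/stale.zip', salt: 'b', filesize: 20, createdAt: now - 3 * ONE_HOUR }
]

// With a 2-hour threshold, only the 3-hour-old entry is old enough to delete.
console.log(selectOrphans(pending, now, 2 * ONE_HOUR).map((entry) => entry.key))
```

The threshold should comfortably exceed the time a user needs to finish the publish form; anything younger is simply picked up by a later run.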

Note that the cron job above is deliberately simple. In a large-scale system, sending one delete request per object to the storage provider can produce a flood of errors. You would need to batch the requests, and possibly record each cleanup action in an audit log for cost analysis.
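A minimal sketch of the batching idea, assuming your provider supports the S3 DeleteObjects API (check its S3-compatibility docs). S3 accepts at most 1,000 keys per DeleteObjects request, so the orphan keys are split into chunks and each chunk becomes one request input. `DeleteObjectsInput` here is a local mirror of the shape `new DeleteObjectsCommand(...)` from @aws-sdk/client-s3 expects, so the sketch runs without the SDK.

```typescript
// S3's DeleteObjects API accepts at most 1,000 keys per request.
const MAX_KEYS_PER_DELETE = 1000

// Local mirror of the input shape passed to `new DeleteObjectsCommand(...)`.
interface DeleteObjectsInput {
  Bucket: string
  Delete: { Objects: { Key: string }[]; Quiet: boolean }
}

// Split items into consecutive chunks of at most `size` elements.
function chunk<T>(items: T[], size: number): T[][] {
  const batches: T[][] = []
  for (let i = 0; i < items.length; i += size) {
    batches.push(items.slice(i, i + size))
  }
  return batches
}

// Build one DeleteObjects request per chunk instead of one DeleteObject per key.
// Quiet: true makes the response list only the keys that failed.
function buildDeleteBatches(bucket: string, keys: string[]): DeleteObjectsInput[] {
  return chunk(keys, MAX_KEYS_PER_DELETE).map((batch) => ({
    Bucket: bucket,
    Delete: { Objects: batch.map((Key) => ({ Key })), Quiet: true }
  }))
}

// Usage inside the cron task (not executed here):
// for (const input of buildDeleteBatches(bucketName, orphanKeys)) {
//   const res = await s3.send(new DeleteObjectsCommand(input))
//   // res.Errors lists keys that failed; keep their Redis entries for the next run
// }
```

Only the Redis entries whose keys do not appear in `res.Errors` should then be removed with removeUploadCache.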

When the system becomes very large, a Node.js server may not perform well enough, and this backend layout may not be cohesive or modular enough. At that point, don't hesitate to move this process out of the Node.js server into a dedicated Go or Rust service, adding features such as Redis-based distributed locks.

Completing the File Upload

As you can see, regardless of whether it's a small or large file, once the upload is finished, we request the following endpoint:

const done = await $fetch(
  `/api/toolset/${props.toolsetId}/upload/complete`,
  {
    method: 'POST',
    body: completeUploadData,
    watch: false,
    ...kungalgameResponseHandler
  }
)

The implementation of this API is as follows:

import prisma from '~/prisma/prisma'
import { s3 } from '~/lib/s3/client'
import {
  CompleteMultipartUploadCommand,
  HeadObjectCommand,
  DeleteObjectCommand
} from '@aws-sdk/client-s3'
import { completeToolsetUploadSchema } from '~/validations/toolset'
import {
  getUploadCache,
  removeUploadCache
} from '~/server/utils/upload/saveUploadSalt'
import { canUserUpload } from '~/server/utils/upload/canUserUpload'
import type { ToolsetUploadCompleteResponse } from '~/types/api/toolset'

export default defineEventHandler(async (event) => {
  const input = await kunParsePostBody(event, completeToolsetUploadSchema)
  if (typeof input === 'string') {
    return kunError(event, input)
  }
  const { salt, uploadId, parts } = input

  const userInfo = await getCookieTokenInfo(event)
  if (!userInfo) {
    return kunError(event, 'User login has expired', 205)
  }

  // Key and declared size were cached in Redis when the presigned URL was issued
  const fileCache = await getUploadCache(salt)
  if (!fileCache) {
    return kunError(event, 'Failed to retrieve uploaded file cache information')
  }

  // Multipart upload: merge the parts, listed in ascending PartNumber order
  if (uploadId) {
    if (!parts || !parts.length) {
      return kunError(event, 'Part information is missing')
    }
    const sorted = parts
      .slice()
      .sort((a, b) => a.PartNumber - b.PartNumber)
      .map((p) => ({ PartNumber: p.PartNumber, ETag: p.ETag }))

    await s3.send(
      new CompleteMultipartUploadCommand({
        Bucket: process.env.KUN_VISUAL_NOVEL_S3_STORAGE_BUCKET_NAME!,
        Key: fileCache.key,
        UploadId: uploadId,
        MultipartUpload: { Parts: sorted }
      })
    )
  }

  // Both paths: ask the storage provider how many bytes were actually stored
  const head = await s3.send(
    new HeadObjectCommand({
      Bucket: process.env.KUN_VISUAL_NOVEL_S3_STORAGE_BUCKET_NAME!,
      Key: fileCache.key
    })
  )

  // head.ContentLength is the stored object's size in bytes
  const actualBytes = Number(head.ContentLength ?? 0)
  if (!actualBytes || actualBytes !== fileCache.filesize) {
    // Declared and stored sizes differ: delete the object and its cache entry
    await s3.send(
      new DeleteObjectCommand({
        Bucket: process.env.KUN_VISUAL_NOVEL_S3_STORAGE_BUCKET_NAME!,
        Key: fileCache.key
      })
    )
    await removeUploadCache(salt)
    return kunError(
      event,
      'File size validation failed. Please retry or contact an administrator.'
    )
  }

  // Returns an error message string, or the user's new daily upload count
  const result = await canUserUpload(userInfo.uid, actualBytes)
  if (typeof result === 'string') {
    return kunError(event, result)
  }

  await prisma.user.update({
    where: { id: userInfo.uid },
    data: { daily_toolset_upload_count: result }
  })

  return {
    salt,
    key: fileCache.key,
    filesize: fileCache.filesize,
    dailyToolsetUploadCount: result
  } satisfies ToolsetUploadCompleteResponse
})

When completing the upload, a present uploadId means the file was sent as a multipart upload (started with CreateMultipartUploadCommand), so we call CompleteMultipartUploadCommand to merge its parts.
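The handler above sorts the client-supplied parts but otherwise trusts them. A slightly stricter sketch, hypothetical and not part of the project, validates the list before it reaches CompleteMultipartUploadCommand: S3 part numbers must be integers from 1 to 10,000, each part needs its ETag, and the list is sent in ascending PartNumber order. Errors are returned as strings, in the same style as the handler's kunError messages.

```typescript
interface UploadedPart {
  PartNumber: number
  ETag: string
}

// Returns the parts sorted by PartNumber, or an error message string.
function normalizeParts(parts: UploadedPart[]): UploadedPart[] | string {
  if (parts.length === 0) return 'Part information is missing'

  const seen = new Set<number>()
  for (const p of parts) {
    // S3 only accepts part numbers 1..10000
    if (!Number.isInteger(p.PartNumber) || p.PartNumber < 1 || p.PartNumber > 10000) {
      return `Invalid part number: ${p.PartNumber}`
    }
    if (seen.has(p.PartNumber)) return `Duplicate part number: ${p.PartNumber}`
    if (!p.ETag) return `Missing ETag for part ${p.PartNumber}`
    seen.add(p.PartNumber)
  }

  // Copy before sorting so the caller's array is untouched, and strip extra fields
  return parts
    .slice()
    .sort((a, b) => a.PartNumber - b.PartNumber)
    .map((p) => ({ PartNumber: p.PartNumber, ETag: p.ETag }))
}
```

Gaps in the part numbers are not rejected here: a missing part produces a smaller object, which the HeadObjectCommand size check catches anyway.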

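If there is no uploadId, the file was sent in a single request and there is nothing to merge. Either way, the handler then moves on to the HeadObjectCommand check.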

The HeadObjectCommand Process

This part is the most cleverly designed aspect of the entire validation logic. It perfectly solves the biggest problem: "Is the file the user uploaded really the size they claimed?"

Most object storage costs come from stored bytes (at least on Backblaze: bandwidth fees are waived when downloads go through Cloudflare, and per-operation transaction fees are small compared with storage).

If a user tells the backend "I'm going to upload a 100MB file" but actually uploads a 100TB file, then when your storage provider's bill arrives next month, your house will disappear again. This is a genuinely scary problem.

Our solution is to use HeadObjectCommand to fetch the file's size again after the user has finished uploading. We then check if this size matches the file size the user initially reported to us, which we stored in Redis.

Here is an example response from HeadObjectCommand:

{ '$metadata':
   { httpStatusCode: 200,
     requestId: '',
     extendedRequestId: '',
     cfId: undefined,
     attempts: 1,
     totalRetryDelay: 0 },
  AcceptRanges: 'bytes',
  LastModified: 2025-09-26T18:11:39.000Z,
  ContentLength: 15521549,
  ETag: '"dca682778c0765206314a525e3bb902c"',
  VersionId: '',
  ContentType: 'application/octet-stream',
  Metadata: {} }

The ContentLength here is the size of the file. By comparing this size with the filesize we stored in UploadSaltCache, we can immediately know if the user really uploaded a 100MB file.

If the flow stops before the resource is published (for example, the handler returns an error, or the user never submits the final form), removeUploadCache is never reached and the file's key-value pair stays in Redis. The cron job, however, periodically scans for these keys and deletes the orphan files they point to. So a spam user trying to make your house disappear cannot exploit this path either.

If the file sizes do not match, the file is immediately deleted using DeleteObjectCommand.
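The decision itself can be pulled out into a pure function, which also makes it easy to unit test. This is a sketch rather than the project's code: `maxBytes` is a hypothetical per-file cap added as a second line of defense, and any `ok: false` result would lead to the same DeleteObjectCommand plus removeUploadCache path as in the handler.

```typescript
type SizeCheck = { ok: true; bytes: number } | { ok: false; reason: string }

// `contentLength` is HeadObjectCommand's ContentLength (may be undefined);
// `declaredBytes` is the filesize the client reported, cached in Redis;
// `maxBytes` is a hypothetical per-file limit.
function checkUploadedSize(
  contentLength: number | undefined,
  declaredBytes: number,
  maxBytes: number
): SizeCheck {
  const actual = Number(contentLength ?? 0)
  if (!actual) return { ok: false, reason: 'Object is empty or missing' }
  if (actual !== declaredBytes) {
    return { ok: false, reason: `Declared ${declaredBytes} bytes, stored ${actual} bytes` }
  }
  if (actual > maxBytes) return { ok: false, reason: 'File exceeds the size limit' }
  return { ok: true, bytes: actual }
}
```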

Handling Details

Some might worry that malicious users could spam HeadObjectCommand by uploading many failed files, thus incurring charges, since it's a Class B transaction and can be abused.

The answer is, probably not (though you can never rule out some determined individuals).

GetObjectCommand is also a Class B operation. Why would anyone spam HeadObjectCommand through a multi-step upload flow when they could simply hammer the download endpoint, which is far easier to call at high concurrency?

Finalizing the Resource Creation

Once the steps above complete, the flow that starts when the user clicks "Confirm Upload" is finished, and the endpoint returns an object like this:

return {
  salt,
  key: fileCache.key,
  filesize: fileCache.filesize,
  dailyToolsetUploadCount: result
} satisfies ToolsetUploadCompleteResponse

The user can then continue to fill in other fields required for publishing the file resource, such as file notes, usage instructions, etc.

Then, an API endpoint like the one below is called, which directly stores the file's key in the system's own database (while the file itself is stored in object storage). For future downloads, you can simply concatenate the file's key with your download domain to get the file's download link.
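A minimal sketch of that concatenation, assuming a hypothetical download domain such as https://download.example.com. Encoding each path segment matters once keys contain spaces, `#`, `?` or non-ASCII file names, which would otherwise break or truncate the URL; `/` is kept as the path separator.

```typescript
// Join a download domain and an object key into a URL.
// Each key segment is percent-encoded; slashes between segments are preserved.
function buildDownloadUrl(domain: string, key: string): string {
  const base = domain.replace(/\/+$/, '') // drop trailing slashes
  const path = key
    .replace(/^\/+/, '') // drop leading slashes
    .split('/')
    .map((segment) => encodeURIComponent(segment))
    .join('/')
  return `${base}/${path}`
}

// Example (hypothetical domain and key):
// buildDownloadUrl('https://download.example.com/', 'toolset/1/my file#v2.zip')
// gives 'https://download.example.com/toolset/1/my%20file%23v2.zip'
```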

import prisma from '~/prisma/prisma'
import { createToolsetResourceSchema } from '~/validations/toolset'
import {
  getUploadCache,
  removeUploadCache
} from '~/server/utils/upload/saveUploadSalt'
import { isValidArchive } from '~/utils/validate'
import type { ToolsetResource } from '~/types/api/toolset'

export default defineEventHandler(async (event) => {
  const userInfo = await getCookieTokenInfo(event)
  if (!userInfo) {
    return kunError(event, 'Login expired', 205)
  }

  const input = await kunParsePostBody(event, createToolsetResourceSchema)
  if (typeof input === 'string') {
    return kunError(event, input)
  }
  const { toolsetId, content, code, password, size, note, salt } = input

  // Files uploaded to object storage must be archives
  const fileCache = await getUploadCache(salt)
  if (fileCache && !isValidArchive(fileCache.key)) {
    return kunError(event, 'Invalid file extension')
  }

  const toolset = await prisma.galgame_toolset.findUnique({
    where: { id: toolsetId },
    select: { id: true }
  })
  if (!toolset) {
    return kunError(event, 'Toolset resource not found')
  }

  const user = await prisma.user.findUnique({
    where: { id: userInfo.uid },
    select: { id: true, name: true, avatar: true }
  })
  if (!user) {
    return kunError(event, 'User not found')
  }

  const newResource = await prisma.$transaction(async (p) => {
    // S3 uploads store the object key; user-provided links store the link itself
    const res = await p.galgame_toolset_resource.create({
      data: {
        content: salt && fileCache ? fileCache.key : content,
        type: salt ? 's3' : 'user',
        code,
        password,
        size: salt && fileCache ? fileCache.filesize.toString() : size,
        note,
        toolset_id: toolsetId,
        user_id: userInfo.uid
      }
    })

    await p.galgame_toolset.update({
      where: { id: toolset.id },
      data: { resource_update_time: new Date() }
    })

    // Use the transaction client so the reward rolls back with everything else
    await p.user.update({
      where: { id: userInfo.uid },
      data: { moemoepoint: { increment: 3 } }
    })

    await p.galgame_toolset_contributor.createMany({
      data: [{ toolset_id: toolsetId, user_id: userInfo.uid }],
      skipDuplicates: true
    })

    return res
  })

  // The resource is persisted, so the Redis safety entry is no longer needed
  if (salt) {
    await removeUploadCache(salt)
  }

  return newResource satisfies ToolsetResource
})

As you can see, in the end, we call removeUploadCache to delete the cache from Redis.

This is because at this point, we are 100% certain that the user has successfully created and published the file resource. Only now can we confidently remove our safety mechanism.

Going Further

In addition to everything mentioned above, we can also envision the following solutions.

Resumable Uploads

Sometimes, a user's network connection is interrupted during a file upload. If the user is uploading a large file with low upload bandwidth, they might get very frustrated with the website's developers.

Our project's design is for uploading small tools on a forum, so we haven't implemented resumable uploads. However, we can provide the basic design idea:

- The frontend saves the ETag of each already uploaded part, together with its PartNumber (e.g., in IndexedDB), to support resumption.
- The backend receives the list of parts during CompleteMultipartUpload and merges them in order (the current implementation already does this).
- If both the frontend and backend restart or fail, the state must be recoverable from the uploadId plus the status stored in the DB/Redis.
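A sketch of the frontend bookkeeping under these assumptions: the hypothetical ResumeState record is what you would persist per file (for example in IndexedDB), and the part size stays fixed for the lifetime of an uploadId. After a reload, the browser only needs fresh presigned URLs (the old ones may have expired) for the part numbers returned by remainingParts.

```typescript
// A part the browser has already uploaded and persisted.
interface SavedPart {
  PartNumber: number
  ETag: string
}

// Hypothetical per-file record persisted so an upload can be resumed.
interface ResumeState {
  uploadId: string
  partSize: number
  fileSize: number
  parts: SavedPart[]
}

// Number of parts a file of `fileSize` bytes is split into (at least one).
function totalParts(fileSize: number, partSize: number): number {
  return Math.max(1, Math.ceil(fileSize / partSize))
}

// 1-based part numbers that still have to be uploaded.
function remainingParts(state: ResumeState): number[] {
  const done = new Set(state.parts.map((p) => p.PartNumber))
  const missing: number[] = []
  for (let n = 1; n <= totalParts(state.fileSize, state.partSize); n++) {
    if (!done.has(n)) missing.push(n)
  }
  return missing
}

// Byte range [start, end) for part `n`, suitable for file.slice(start, end).
function partRange(n: number, state: ResumeState): [number, number] {
  const start = (n - 1) * state.partSize
  return [start, Math.min(start + state.partSize, state.fileSize)]
}
```

Once remainingParts returns an empty list, the saved parts plus the uploadId are exactly what the complete endpoint expects.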

Some large projects use a dedicated table to store the upload status of files. This is more robust than using a Redis cache and supports more advanced operations, such as restoring state for resumable uploads.

Also, if your project requires upload auditing, such as detailed information on users' average upload times, success rates, etc., then you will definitely need this table.

Risk Control

Implementing a risk control system is an extremely complex task. Every risk control system has at least its own set of complex algorithms for judging user behavior.

However, you can implement a crude risk control system with some simple operations to apply certain rate limits to users.
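For example, a minimal fixed-window limiter, sketched in memory for a single Node.js process. The limits in the usage comment are hypothetical; with several server instances the counters would have to live in Redis instead (for example, INCR plus EXPIRE on a per-user key).

```typescript
// Crude fixed-window rate limiter: at most `limit` actions per user per window.
// In-memory, single-process, no eviction; counters are lost on restart.
// Assumes limit >= 1.
class FixedWindowLimiter {
  private readonly limit: number
  private readonly windowMs: number
  private readonly counters = new Map<string, { windowStart: number; count: number }>()

  constructor(limit: number, windowMs: number) {
    this.limit = limit
    this.windowMs = windowMs
  }

  // If allowed, records the action and returns true; otherwise returns false
  // without counting it.
  tryAcquire(userId: string, now: number = Date.now()): boolean {
    const current = this.counters.get(userId)
    if (!current || now - current.windowStart >= this.windowMs) {
      this.counters.set(userId, { windowStart: now, count: 1 })
      return true
    }
    if (current.count >= this.limit) return false
    current.count++
    return true
  }
}

// Usage idea, before issuing a presigned upload URL (hypothetical limits):
// const presignLimiter = new FixedWindowLimiter(10, 60 * 60 * 1000)
// if (!presignLimiter.tryAcquire(String(userInfo.uid))) {
//   return kunError(event, 'Too many upload requests')
// }
```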

Monitoring Metrics

For the design of a large system, your team lead might ask you to design the following metrics:

Request counts for common operations like GetObjectCommand, HeadObjectCommand, presigned request rates, daily download/upload bytes per user, and access statistics for popular files.

You can use these metrics to integrate with an alerting system, such as the currently popular OpenTelemetry + Tempo + Loki architecture.

On the frontend, you can easily add a CAPTCHA (ours, for example, asks users to pick "all the white-haired girls") or integrate reCAPTCHA.

By the way, our current project has no logging or monitoring system. Since it's not an enterprise-level project and doesn't generate profit, our main principle is: we don't audit. If it crashes, we restore the database. So sue me.

Unit Testing

The process described above is actually a closed loop, so you can easily write tests covering the entire lifecycle of a file, from creation to deletion.
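A minimal sketch of such a lifecycle test, using a hypothetical in-memory FakeWorld in place of the real S3 client and Redis, so each step (presign, upload, complete, publish, cleanup) can be exercised without network access. It mirrors the logic described above rather than importing the project's actual handlers.

```typescript
interface CacheEntry {
  key: string
  filesize: number
}

// Hypothetical in-memory stand-ins for the bucket and the Redis upload cache.
class FakeWorld {
  objects = new Map<string, number>() // object key -> stored size in bytes
  cache = new Map<string, CacheEntry>() // salt -> entry written at presign time

  // Presign: remember the key and the size the client declared.
  presign(salt: string, key: string, filesize: number): void {
    this.cache.set(salt, { key, filesize })
  }

  // The client uploads bytes directly to storage.
  upload(key: string, actualBytes: number): void {
    this.objects.set(key, actualBytes)
  }

  // Complete: compare stored and declared sizes; delete everything on mismatch.
  complete(salt: string): boolean {
    const entry = this.cache.get(salt)
    if (!entry) return false
    const size = this.objects.get(entry.key) ?? 0
    if (!size || size !== entry.filesize) {
      this.objects.delete(entry.key)
      this.cache.delete(salt)
      return false
    }
    return true
  }

  // Publish: the resource is saved, so the safety entry is dropped.
  publish(salt: string): void {
    this.cache.delete(salt)
  }

  // Cron: every object still referenced by the cache is treated as an orphan.
  cleanup(): number {
    let deleted = 0
    for (const [salt, entry] of this.cache) {
      this.objects.delete(entry.key)
      this.cache.delete(salt)
      deleted++
    }
    return deleted
  }
}
```

A real suite would run the same three scenarios (honest upload, lying upload, abandoned upload) against the actual handlers with a mocked S3 client; the point is that each path ends in a known bucket and cache state.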

Downloading Files

So far we haven't said much about downloading files. You can use getSignedUrl to generate a temporary download link (as suggested earlier, an expiry of 1 hour is appropriate, or 2 hours if you think your users' connections are slow).
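A small helper for turning that expiry into the `expiresIn` value, in seconds, that getSignedUrl from @aws-sdk/s3-request-presigner accepts. SigV4 presigned URLs are valid for at most 7 days, so the value is clamped. The commented call at the end shows where it would plug in; `bucketName` and `resource` are placeholders, with `resource.content` holding the object key as stored by the create endpoint.

```typescript
// SigV4 presigned URLs can be valid for at most 7 days.
const MAX_PRESIGN_SECONDS = 7 * 24 * 60 * 60

// Convert a desired lifetime in hours into getSignedUrl's `expiresIn` (seconds),
// clamped to the range a SigV4 signature allows.
function downloadExpiresIn(hours: number): number {
  const seconds = Math.round(hours * 60 * 60)
  return Math.min(Math.max(seconds, 1), MAX_PRESIGN_SECONDS)
}

// With the real SDK (not executed here):
// import { GetObjectCommand } from '@aws-sdk/client-s3'
// import { getSignedUrl } from '@aws-sdk/s3-request-presigner'
// const url = await getSignedUrl(
//   s3,
//   new GetObjectCommand({ Bucket: bucketName, Key: resource.content }),
//   { expiresIn: downloadExpiresIn(1) }
// )
```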

Temporary links are also more secure. Imagine a user posting your website's download link in a few groups: dozens or hundreds of people could then download the file directly without ever visiting your site, which hurts your advertising or traffic revenue.

We currently just concatenate the key and domain to play the good guy. Can't be helped, since we're not profitable.

Hotlink Protection

The method for hotlink protection is explained in detail in the article we mentioned at the beginning, Using Cloudflare Workers to Download Files from a B2 Private Bucket, so we won't repeat it here.

When a user clicks download on the frontend, you can use the presigned URL generation method mentioned in that article to hide the file key. Combined with a private bucket and Workers, this keeps your real download link hidden from everyone from start to finish.

DMCA / ToS

If you receive a DMCA notice by email, just delete the content. Never fight a DMCA notice.

If your website's scale and download volume are large enough to violate the ToS of providers like Backblaze or Cloudflare, it means you should upgrade to their enterprise plan.

Summary

Through the process described above, we have completely implemented the entire flow of a resource from user upload to publication.

Combined with the two articles we initially provided, this should enable you to design a robust, secure system for publishing and downloading resources that can support most websites.

If your system is too large and you're unsure how to design it, you can directly join our Telegram development group at https://t.me/KUNForum to contact us.
